Earlier this month, Apple regained its position as the largest company in the world (although since then it has been duking it out with Microsoft and now even Nvidia).

Ever since ChatGPT pierced the public’s consciousness, Apple has been regarded as an AI laggard, given its relative silence on a game plan for generative AI. But on the 10th of June Apple broke its silence, outlining its plan to the multitudes at its annual Worldwide Developers Conference (WWDC): for its 2 billion active users, it will be Apple Intelligence. That is, artificial intelligence, but Apple-flavoured.

Apple’s demos at WWDC showed a very compelling use of generative AI, one that takes a different approach from the run of AI applications being developed by large corporations including OpenAI, Google and others. The company also made clear that it was delivering these capabilities not by leveraging OpenAI (as was widely speculated), but with its own internally developed models.

With all of this, Apple aims to give Siri the intelligence it has lacked for the last 14 years.

What can Apple Intelligence do?

Apple isn’t promising to deliver Artificial General Intelligence (like so many others) and is instead showing how the use of smaller models on-device can make people’s everyday lives much easier.

Say you receive an email from a colleague about a last-minute meeting, but you know you have another commitment that is buried somewhere in your messages and not in your calendar. Siri has you sorted.

There are various other features, too, such as writing assistance, image creation and yes, customisable emojis.

Contrary to rumours of an Apple-OpenAI tie-up, the ChatGPT maker ended up taking a back seat for the most part, with its technology only being used as a last resort for queries too difficult to address with Apple’s on-device or private cloud models (more on this later).

According to Apple, 99% of the AI functionality is a result of internal AI endeavours. The company’s ~3 billion parameter small language model (SLM), which it benchmarks against Microsoft’s Phi, is the bedrock of its AI strategy. For more on the shrinkage of LLMs to fit on devices, read our February post.

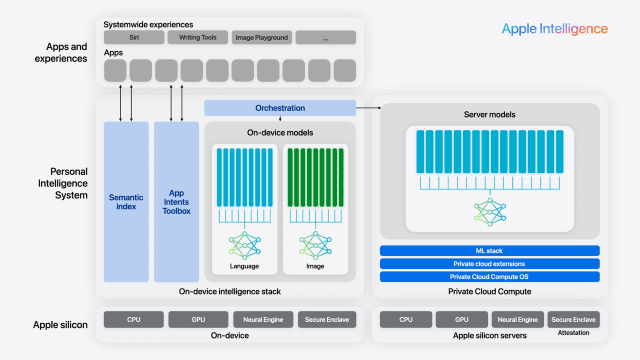

So how is it that Apple can deliver so much utility with a model 300x smaller than that of OpenAI? The simple answer is it relies on information it already has about the user – context – which provides the guardrails necessary to ensure accurate and relevant answers.

The model focuses on common tasks which can be solved on-device

Source: Apple

Alone, a 3 billion parameter model will be less accurate than a trillion parameter model like the one reportedly behind ChatGPT, but when used in combination with on-device contextual data – messages, calendar events, emails, contacts and more – the output can be even more useful in many use cases. This data is what Apple refers to as the Semantic Index. Apple’s demos made clear that owning the operating system is an enormous competitive advantage when it comes to delivering utility to consumers through generative AI.

The same goes for Google and its control of Android and Microsoft and its control of Windows. Google recently announced an event in August that will launch its latest Pixel smartphone, which no doubt will be packed full of AI features.

But for Apple, the benefits of the on-device model are two-fold.

First, by keeping the majority of the processing on the device itself, Apple avoids the privacy concerns that come with taking personal information off the phone and sending it to a third-party cloud for processing.

For more complex queries requiring extra grunt, Apple is building out its own private cloud – Private Cloud Compute. Apple’s cloud will be based on its M-series architecture of chips – much less efficient than Nvidia GPUs, but cheaper, privacy-safe and also running the same operating system as the iPhone or Mac on which the query is generated.

Only once it’s been decided that the query is too complex for either the on-device model or Private Cloud Compute will the user be asked whether to send it to OpenAI’s ChatGPT.
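The escalation order described above can be sketched as a simple three-tier router. This is only an illustration: the complexity score, the two thresholds and every name in the code are hypothetical, and Apple has not published how it decides where a request runs.

```python
from enum import Enum
from typing import Callable


class Tier(Enum):
    ON_DEVICE = "on-device model"
    PRIVATE_CLOUD = "Private Cloud Compute"
    CHATGPT = "ChatGPT"
    DECLINED = "declined by user"


# Hypothetical thresholds on a made-up 0-1 complexity score.
ON_DEVICE_LIMIT = 0.4
PRIVATE_CLOUD_LIMIT = 0.8


def route(complexity: float, ask_user: Callable[[], bool]) -> Tier:
    """Pick the first tier that can handle the request, most private first."""
    if complexity <= ON_DEVICE_LIMIT:
        return Tier.ON_DEVICE
    if complexity <= PRIVATE_CLOUD_LIMIT:
        return Tier.PRIVATE_CLOUD
    # Only this last hop leaves Apple's infrastructure, so it needs consent.
    return Tier.CHATGPT if ask_user() else Tier.DECLINED


if __name__ == "__main__":
    for score in (0.2, 0.6, 0.95):
        print(score, "->", route(score, ask_user=lambda: True).value)
```

The point of the ordering is that the user is only ever prompted at the final step; the first two tiers are chosen silently.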

So Apple Intelligence for all? Not so fast…

Apple Intelligence will gradually be rolled out later this year, with some features coming as late as 2025. There are both services and device sales implications to consider.

AI functionality will likely lead to new services revenue. With a more functional Siri comes greater user engagement and the opportunity for Apple to put its own or third-party applications in front of those users. This has the potential to drive subscription or even advertising sales.

On the hardware front, perhaps most interesting is that Apple Intelligence will only be available on the iPhone 15 Pro and Pro Max as well as PCs with at least an M1 processor (the first Mac chip to include a Neural Processing Unit, or NPU). But before earlier generation owners grab their torches and pitchforks, this is a hardware reality and (incidentally!) a cash grab. The requirements are a processor with an NPU and at least 8GB of random-access memory (RAM). Once again, semiconductors have become the bottleneck.

Even a 3 billion parameter SLM takes up a lot of space, and must be stored in RAM so that it is as close to the processing power as possible. Some experts peg the RAM requirements of Apple’s SLM at around 3GB, and that’s before the operating system itself is accounted for. This is why the iPhone 15 and iPhone 15 Plus miss the Apple Intelligence boat, as they only have 6GB of RAM versus the 8GB of the Pro models.
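A back-of-the-envelope check shows where a figure of around 3GB could come from. The sketch below assumes the model weights dominate memory use and that every parameter is stored at one fixed precision; the precisions listed are illustrative assumptions, not figures published by Apple.

```python
GB = 1e9  # decimal gigabytes


def weights_gb(params: float, bits_per_param: int) -> float:
    """Memory needed to hold the model weights, in GB."""
    return params * bits_per_param / 8 / GB


PARAMS = 3e9  # ~3 billion parameters, as quoted in the article

for bits in (16, 8, 4):
    print(f"{bits}-bit weights: {weights_gb(PARAMS, bits):.1f} GB")
```

At 8 bits per parameter the weights alone come to about 3GB, in line with the estimate above, while 16-bit weights would need about 6GB, the entire RAM of an iPhone 15. Lower precision shrinks the footprint further, but the operating system and other apps still need their share.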

So as to ensure all new iPhone buyers have access to Apple Intelligence, it is reasonable to assume the company will make all future models of the iPhone with at least 8GB of RAM, if not more.

Good for suppliers to Apple – and other smartphone/PC makers

The run-up in Apple’s share price was a recognition by the market that Apple wasn’t behind in AI, but also that Apple Intelligence could drive users to upgrade their devices sooner – a smartphone and PC “upgrade super-cycle”. This doesn’t seem out of the question.

But Apple won’t be the only beneficiary of more sales of its smartphones and PCs. The step up in RAM content will be great for the leading memory players in the portfolio, Micron and Samsung. Historically, growth in the memory content of smartphones and PCs has been gradual, but Apple Intelligence necessitates a step-change. Other componentry suppliers, Broadcom (for Wi-Fi) and Qualcomm (5G), will also naturally benefit from higher device sales.

Meanwhile, Apple’s competitors aren’t standing still. Google is incorporating generative AI features into its Android smartphone operating system and Microsoft into its Windows Copilot+ PCs (which require 16GB of RAM). Memory requirements are going up for all devices.

Better, more complex processors with NPUs are also required. Qualcomm is the leading supplier of processors for non-Apple smartphones, and its chips are behind the surprisingly long battery life of Microsoft’s Copilot+ PCs.

Behind the fabrication of these increasingly complex processors from Apple and Qualcomm is Taiwan Semiconductor Manufacturing Company (TSMC). This increase in volume will no doubt be a tailwind for the company. And despite the meteoric rise of demand for Nvidia chips, the GPU manufacturer is only the second largest TSMC customer, according to Bloomberg estimates, clocking in at ~11%. Apple is estimated at ~25%.

So Apple remains the big dog out there in the tech world – the biggest buyer of chips, one of the biggest ecosystems of users and devices, and now also with a tailor-made AI strategy, gliding over all. Apple may no longer be the world’s largest company, but it’s showing why it may deserve a place at the top.
