
Powerful forces are at work. The US Federal Reserve is losing the argument for higher interest rates following six months of better inflation data. Artificial intelligence companies have captured the public’s imagination, not because of the important ground-breaking work they have been doing for years in fields as diverse as protein folding and computational lithography, but because they can summarise a website – something everybody can do. And Zuck’s Meta has demonstrated the importance of a fundamental principle of web companies: the network effect matters – really matters. It launched a Twitter competitor which got to 100m users in five days. More on this later.

Market rally continues

The Loftus Peak Global Disruption Fund returned 4.1% net-of-fees during June, as markets continued digesting the opportunity in AI and the economy proved more resilient than expected in the face of rising interest rates. Read our June Fund update here for returns over longer periods.

The returns have been underpinned by gains in long-held semiconductor and artificial intelligence companies including Advanced Micro Devices (AMD), Nvidia, Arista and Marvell Technology, as well as hyperscalers including Microsoft and Amazon. Netflix also contributed as competition in streaming crumbled (starting with Disney and Paramount, though the weakness is much broader than that).

But let us be clear: it’s an AI rally that is driving the secular outperformance in the portfolios we manage, with stable interest rates the necessary backdrop.

In this very early stage of generative AI, the silicon tool required to train the large language models (LLMs) that underpin the likes of OpenAI’s ChatGPT and Google’s Bard is the graphics processing unit (GPU). Nvidia’s current dominance of the GPU market, and of the software stack that makes GPUs do useful work, makes the company the AI darling of the moment.

As LLMs and other smaller, more specialised models come into being, we expect a subsequent boom in AI inference, where GPUs will compete with other chips such as central processing units (CPUs) and field programmable gate arrays (FPGAs) to do the work of drawing results out of the previously trained models.

Microsoft recently announced pricing for its AI services. For example, its Microsoft 365 enterprise bundle, which includes the Office products, adds an AI Copilot to popular productivity applications such as Word, Excel and Teams for an additional US$30 per seat per month. A Copilot-assisted user query here could be something as simple as booking a meeting or restaurant, or something more complex, like constructing a presentation based on multiple company documents.

Framing the AI opportunity

Over the past month, there has been a flurry of reporting on the size and timing of the AI opportunity.

C.J. Muse, a highly respected analyst from broker Evercore, modelled the build-out of capacity for artificial intelligence. His commentary puts Nvidia’s datacentre segment revenues at US$114b by 2027, effectively ~8x the segment’s 2022 revenue of US$15b.

For a big company, already one of the world’s biggest, this is unheard-of growth – a point that has been made in commentary by others many times over.
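To put that multiple in annual terms, the short sketch below converts the two quoted figures into an implied compound growth rate. The five-year compounding window (2022 to 2027) and the variable names are our assumptions for illustration, not part of the Evercore model.

```python
# Figures quoted in the commentary above, in US$ billions.
revenue_2022 = 15.0
revenue_2027 = 114.0

# Assumption: growth compounds annually over the five years from 2022 to 2027.
years = 2027 - 2022

multiple = revenue_2027 / revenue_2022
# Compound annual growth rate implied by that multiple (our derivation).
cagr = multiple ** (1 / years) - 1

print(f"Revenue multiple: {multiple:.1f}x")
print(f"Implied compound annual growth: {cagr:.1%}")
```

On these assumptions the forecast implies revenue growing by roughly half every year for five years running.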

Another piece of analysis came from US research-only company Bernstein, which suggests that at 1 billion AI queries a day, around US$10b-US$20b of GPUs per year would be required. One billion AI queries a day represents about 12% of Google’s daily search traffic, a number we mention for context only.

It’s not hard to believe the ~1 billion knowledge workers globally might utilise a copilot at least 10 times a day (at least 10x the 1b daily AI queries mentioned above). But that’s just the highly visible bit, not the datacentre-grade offering in the Azure Cloud.
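The arithmetic behind these comparisons is simple enough to lay out directly. The sketch below uses only the figures quoted above; the variable names are ours, and the Google search volume is backed out from the 12% figure rather than taken from any published statistic.

```python
# Inputs quoted in the text above.
bernstein_queries_per_day = 1e9      # Bernstein's scenario
share_of_google_traffic = 0.12       # 1b queries as a share of Google's daily searches
knowledge_workers = 1e9              # approximate global knowledge workers
queries_per_worker_per_day = 10      # the "at least 10 times a day" lower bound

# Google's daily search volume implied by the 12% figure (a derived number).
implied_google_searches_per_day = bernstein_queries_per_day / share_of_google_traffic

# Daily copilot queries if every knowledge worker hits the lower bound.
copilot_queries_per_day = knowledge_workers * queries_per_worker_per_day
ratio_to_bernstein = copilot_queries_per_day / bernstein_queries_per_day

print(f"Implied Google searches per day: {implied_google_searches_per_day / 1e9:.1f}b")
print(f"Copilot queries per day: {copilot_queries_per_day / 1e9:.0f}b")
print(f"Multiple of the Bernstein scenario: {ratio_to_bernstein:.0f}x")
```

We have deliberately not scaled the GPU spend estimate by the same multiple, since nothing in the analysis cited says that hardware cost rises in proportion to query volume.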

What to make of all this

Is all or any of this momentum behind AI credible? Difficult to know. What we are moderately certain about is that there is a groundswell of curiosity and investment by companies large and small to gauge specifically what AI has to offer.

For example, Wipro, one of India’s top providers of software services, wants everyone on staff to know how to use artificial intelligence and has announced a US$1 billion investment to do so. It joins the big four accounting firms, IBM, BT (British Telecom) and many, many others.

If it turns out, after a year say, that the expected productivity and revenue enhancements do not eventuate, the AI wave will likely crash. On the other hand, if it turns out that the productivity and revenue enhancements are tangible, it’s possible that there’s more room to run for many of the companies at the heart of these services.

Meta network effects

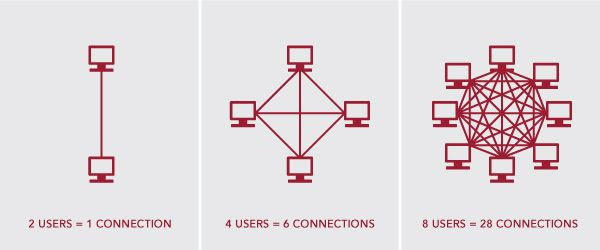

In addition to the various missteps of Twitter, the apparent success of Threads is also attributable to Meta’s Instagram, a platform that has been built on the network effect. At a high level, the value of a network grows far faster than its membership: as the number of participants (users and devices) increases arithmetically, the number of possible connections between them grows roughly with the square of that number, as shown below.

The left number refers to the number of users; the right number is the number of connections (or value, assuming every connection is a unit of value, e.g. an advertisement or transaction).

Source: Kinesis
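For readers who want to reproduce the relationship, the sketch below counts the distinct pairwise links among a given number of users, n(n-1)/2. We are assuming the figure counts connections this way; the function name and the sample user counts are ours, chosen for illustration.

```python
def connections(users: int) -> int:
    """Number of distinct pairwise links among `users` participants: n(n-1)/2."""
    return users * (users - 1) // 2


# Sample user counts, chosen for illustration only.
for n in (2, 5, 10, 100, 1000):
    print(f"{n:>5} users -> {connections(n):>7} possible connections")
```

Going from 100 to 1,000 users is a tenfold increase in membership but roughly a hundredfold increase in possible connections, which is the asymmetry that makes an established network so hard to displace.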

Since inception, Loftus Peak has sought to invest in companies benefiting from this principle, valuation permitting. These forces are particularly potent in social media, given they work best when a majority of friends/interests etc are present, leading to a significant moat.

Meta’s launch of Threads highlights the advantage of a large network in the launch of a new product. Investors will know this strategy well by now, given the Fund’s early investment and weighting towards Apple based on the network it has since monetised through its ever-growing services business including Apple Pay and messaging.

The takeaway here should be that in an increasingly connected world, network effects are an almost unassailable advantage, and one that the companies possessing them can tap for growth.
